Audio Indexation

From HLT@INESC-ID

Recent years have seen a growing demand for the monitoring of multimedia data. Broadcast News (BN) streams are a good example of such data: every day, thousands of TV and radio stations broadcast many hours of information (news, interviews, documentaries). To search and retrieve information from these massive quantities of data, the data must be stored in a database and, most importantly, indexed and catalogued.

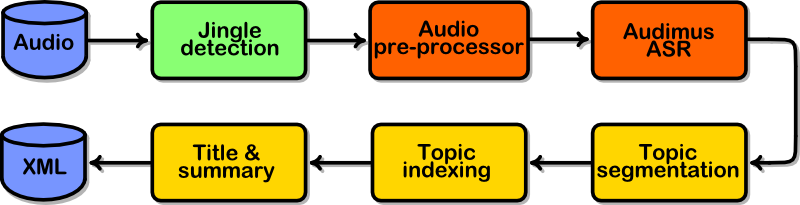

The recorded audio stream is processed through several stages that successively segment, transcribe, and index it, compiling the resulting transcription and metadata into an XML file. The stages are:

- Jingle detection

- Audio pre-processor

- Automatic speech recognition (AUDIMUS)

- Topic segmentation and indexing

- Title and summary

- Generate transcription XML file with all metadata information
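The stages form a strictly sequential chain in which each module consumes the output of the previous one. The following minimal Python sketch illustrates only that architecture: the `Document` container, the stage names, and the `run_pipeline` helper are hypothetical, and each placeholder stage merely records that it ran instead of doing any real processing.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Document:
    """Hypothetical container passed from stage to stage."""
    audio_path: str
    log: List[str] = field(default_factory=list)


Stage = Callable[[Document], Document]


def make_stage(name: str) -> Stage:
    """Build a placeholder stage that only records that it ran."""
    def stage(doc: Document) -> Document:
        doc.log.append(name)
        return doc
    return stage


# Hypothetical stage names, in the order listed above.
PIPELINE: List[Stage] = [
    make_stage("jingle_detection"),
    make_stage("audio_preprocessing"),
    make_stage("speech_recognition"),
    make_stage("topic_segmentation_and_indexing"),
    make_stage("title_and_summary"),
    make_stage("xml_generation"),
]


def run_pipeline(doc: Document, stages: List[Stage]) -> Document:
    # Each stage consumes the output of the previous one.
    for stage in stages:
        doc = stage(doc)
    return doc


result = run_pipeline(Document("news.wav"), PIPELINE)
print(result.log)
```

Because every stage in the sketch shares the same signature, a stage can be replaced or tested in isolation; this is a property of the sketch, not a claim about the actual system.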

Jingle detection

First, the recorded audio file is processed by the {\it Jingle Detection} module (see section~\ref{sec:jd} ahead for a detailed description), which identifies the precise start and end times of the news show, identifies portions of audio that are not relevant to the stories (news fillers), and detects commercial breaks inside the show. Based on these time instants, a new audio file is generated containing only the relevant content of the news show.
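Turning the detected time instants into the new audio file amounts to subtracting the excluded intervals (news fillers and commercial breaks) from the span between the show's start and end. A sketch, assuming all times are in seconds and that the excluded regions are reported as (start, end) pairs; `relevant_intervals` is a hypothetical helper, and the actual cutting and concatenation of audio samples is not shown.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (start, end) in seconds


def relevant_intervals(show_start: float, show_end: float,
                       excluded: List[Interval]) -> List[Interval]:
    """Return the parts of [show_start, show_end) not covered by any excluded interval."""
    kept: List[Interval] = []
    cursor = show_start
    for start, end in sorted(excluded):
        # Clip each excluded interval to the show boundaries.
        start, end = max(start, show_start), min(end, show_end)
        if end <= start:
            continue  # entirely outside the show
        if start > cursor:
            kept.append((cursor, start))
        # max() also handles overlapping excluded intervals.
        cursor = max(cursor, end)
    if cursor < show_end:
        kept.append((cursor, show_end))
    return kept


kept = relevant_intervals(30.0, 1830.0, [(600.0, 780.0),    # commercial break
                                          (1200.0, 1215.0),  # news filler
                                          (0.0, 30.0)])      # pre-show material
print(kept)  # [(30.0, 600.0), (780.0, 1200.0), (1215.0, 1830.0)]
```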

Audio pre-processing

The new audio file is then fed to the Audio Pre-processor module (see chapter~\ref{chapter:audio_pre_processor} for a detailed description). This module outputs a set of homogeneous acoustic segments, discriminating between speech and non-speech. Each speech segment contains audio from a single speaker and is annotated with the background conditions, the speaker's gender, and a speaker-cluster label.
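The output of the Audio Pre-processor can be pictured as a list of labelled segments. The sketch below assumes, for illustration only, that an upstream classifier has already assigned each 10 ms frame a label (speech/non-speech, gender, speaker cluster, background), and shows how runs of identical labels collapse into homogeneous segments. The class and field names and the frame shift are hypothetical; the real module's classifiers and segmentation algorithm are not reproduced here.

```python
from dataclasses import dataclass
from itertools import groupby
from typing import List, Optional, Tuple

FRAME_SHIFT = 0.01  # assumed frame shift in seconds (10 ms)


@dataclass(frozen=True)
class FrameLabel:
    speech: bool
    gender: Optional[str]    # "F" or "M" for speech frames, None otherwise
    speaker: Optional[int]   # speaker-cluster id, None for non-speech
    background: str          # e.g. "clean", "music", "noise"


@dataclass
class Segment:
    first_frame: int
    end_frame: int           # exclusive
    label: FrameLabel

    def times(self) -> Tuple[float, float]:
        """Start and end of the segment in seconds."""
        return self.first_frame * FRAME_SHIFT, self.end_frame * FRAME_SHIFT


def frames_to_segments(frames: List[FrameLabel]) -> List[Segment]:
    """Merge runs of consecutive frames with identical labels into segments."""
    segments: List[Segment] = []
    position = 0
    for label, run in groupby(frames):
        length = sum(1 for _ in run)
        segments.append(Segment(position, position + length, label))
        position += length
    return segments


music = FrameLabel(False, None, None, "music")
anchor = FrameLabel(True, "F", 0, "clean")
reporter = FrameLabel(True, "M", 1, "noise")
frames = [music] * 50 + [anchor] * 300 + [reporter] * 200
for seg in frames_to_segments(frames):
    print(seg.first_frame, seg.end_frame, seg.label.speech, seg.label.speaker)
```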

Speech recognition

Each audio segment that the Audio Pre-processor marked as containing speech (a transcribable segment) is then processed by the Speech Recognition module (see chapter~\ref{chapter:speech_recognition} for a detailed description).
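The interface between the two modules reduces to a filter: only segments flagged as speech reach the recognizer. A minimal sketch, in which `Segment`, `transcribe_segments`, and `fake_recognizer` are hypothetical names and the recognizer is a stand-in callable, not an actual AUDIMUS API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Segment:
    seg_id: str
    start: float
    end: float
    is_speech: bool  # set by the audio pre-processor


def transcribe_segments(segments: List[Segment],
                        recognize: Callable[[Segment], str]) -> Dict[str, str]:
    """Send only transcribable (speech) segments to the recognizer."""
    return {seg.seg_id: recognize(seg) for seg in segments if seg.is_speech}


def fake_recognizer(seg: Segment) -> str:
    # Placeholder standing in for the real recognizer.
    return f"<words for {seg.seg_id}>"


segments = [Segment("s1", 0.0, 0.5, False),
            Segment("s2", 0.5, 3.5, True),
            Segment("s3", 3.5, 5.0, True)]
print(transcribe_segments(segments, fake_recognizer))
# {'s2': '<words for s2>', 's3': '<words for s3>'}
```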

Topic segmentation and indexing

The Topic Segmentation module groups segments into complete, homogeneous stories. For each story, the Topic Indexing module then classifies its content according to a hierarchically organized thematic thesaurus~\cite{2003_MSDR_Amaral, 2003_PROPOR_Amaral, 2003_NDDL_Amaral}.
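The segmentation criterion is not detailed here. One simple heuristic, assumed purely for illustration, is to open a new story whenever the news anchor (a designated speaker cluster) starts speaking after another speaker. The sketch also shows how a descriptor from a hierarchical thesaurus can be expanded into its full path from the root; the thesaurus fragment and all function names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Segment = Tuple[str, int]  # (segment id, speaker-cluster id)


def group_into_stories(segments: List[Segment], anchor: int) -> List[List[str]]:
    """Start a new story each time the anchor speaks after a non-anchor segment."""
    stories: List[List[str]] = []
    previous: Optional[int] = None
    for seg_id, speaker in segments:
        if not stories or (speaker == anchor and previous != anchor):
            stories.append([])
        stories[-1].append(seg_id)
        previous = speaker
    return stories


# Hypothetical fragment of a hierarchical thesaurus: descriptor -> parent.
PARENT: Dict[str, Optional[str]] = {
    "Economy": None,
    "Labour": "Economy",
    "Strikes": "Labour",
}


def thesaurus_path(descriptor: str) -> List[str]:
    """Return the path from the thesaurus root down to `descriptor`."""
    path: List[str] = []
    node: Optional[str] = descriptor
    while node is not None:
        path.append(node)
        node = PARENT[node]
    return list(reversed(path))


segments = [("s1", 0), ("s2", 0), ("s3", 2), ("s4", 0), ("s5", 3), ("s6", 3)]
print(group_into_stories(segments, anchor=0))
# [['s1', 's2', 's3'], ['s4', 's5', 's6']]
print(thesaurus_path("Strikes"))
# ['Economy', 'Labour', 'Strikes']
```

This heuristic misses boundaries when the anchor introduces two stories back to back, so a real system would need additional cues.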

Title and summary

Finally, a Title and Summary generation module is applied to each story. Since the system delivers a set of stories to the user, we want a mechanism that conveys the content of each story more closely than the topic indexing alone~\cite{2003_CBMI_Neto, 2004_ACUSTICA_Trancoso}.
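To illustrate what such a module produces, the sketch below uses a generic extractive baseline, not the method of the cited work: the title is the opening words of the story's first sentence, and the summary keeps the sentences whose words are most frequent within the story, in their original order. It assumes the transcription has already been split into punctuated sentences, which raw recognizer output may not provide; the function names and parameters are hypothetical.

```python
import re
from collections import Counter
from typing import List, Tuple


def split_sentences(text: str) -> List[str]:
    """Split punctuated text after '.', '!' or '?'."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokens(sentence: str) -> List[str]:
    return re.findall(r"\w+", sentence.lower())


def title_and_summary(text: str, max_title_words: int = 8,
                      summary_len: int = 2) -> Tuple[str, List[str]]:
    sentences = split_sentences(text)
    if not sentences:
        return "", []
    freq = Counter(w for s in sentences for w in tokens(s))

    def score(sentence: str) -> float:
        # Mean in-story frequency of the sentence's words.
        words = tokens(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    # Title: opening words of the first sentence, without final punctuation.
    title = " ".join(sentences[0].split()[:max_title_words]).rstrip(".!?")
    # Summary: top-scoring sentences, restored to their original order.
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:summary_len])
    return title, [sentences[i] for i in chosen]


story = ("The government approved the budget today. "
         "The budget raises taxes on fuel. Weather was sunny.")
title, summary = title_and_summary(story)
print(title)
print(summary)
```

A real system would at least discard stop words before scoring; otherwise frequent function words such as "the" dominate the ranking.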

Generate transcription XML

After all these processing stages, an XML file containing all the relevant information extracted by the previous modules is generated according to a DTD specification~\cite{2003_MSDR_Neto, 2003_CBMI_Neto}.
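The XML output can be produced with Python's standard `xml.etree.ElementTree` module. The DTD itself is not reproduced here, so the element and attribute names below (`BroadcastNews`, `Story`, `Title`, `Summary`, `Topic`, `Segment`) and the input dictionary layout are hypothetical; an implementation would follow the cited DTD and could validate its output against it.

```python
import xml.etree.ElementTree as ET
from typing import Any, Dict, List


def build_xml(show_id: str, stories: List[Dict[str, Any]]) -> str:
    """Serialize stories, their metadata, and their segments to an XML string."""
    root = ET.Element("BroadcastNews", {"id": show_id})
    for number, story in enumerate(stories, start=1):
        story_el = ET.SubElement(root, "Story", {"number": str(number)})
        ET.SubElement(story_el, "Title").text = story["title"]
        ET.SubElement(story_el, "Summary").text = story["summary"]
        # One element per thesaurus descriptor assigned by topic indexing.
        for topic in story["topics"]:
            ET.SubElement(story_el, "Topic").text = topic
        # Transcribed segments with their time stamps and speaker cluster.
        for seg in story["segments"]:
            seg_el = ET.SubElement(story_el, "Segment", {
                "start": f"{seg['start']:.2f}",
                "end": f"{seg['end']:.2f}",
                "speaker": str(seg["speaker"]),
            })
            seg_el.text = seg["words"]
    return ET.tostring(root, encoding="unicode")


stories = [{
    "title": "Budget approved",
    "summary": "The government approved the budget.",
    "topics": ["Economy/Public finance"],
    "segments": [{"start": 12.5, "end": 20.0, "speaker": 0,
                  "words": "the government approved the budget today"}],
}]
print(build_xml("show-001", stories))
```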